June 18, 2024

Public-facing AI: 5 tips to overcome generative AI skepticism

Our research finds that consumers are skeptical of AI; however, they’re willing to give it a try—if you lead by example and show them the benefits.

Few technologies generate as much interest today as generative artificial intelligence, and rightly so. In our recent research report “New work, new world,” we found that the technology will boost US productivity by between $477 billion and $1 trillion over the next decade.

In these early days, the conversation around generative AI has centered on balancing economic gains with the technology’s impact on humans, and on employees in particular. But as generative AI matures and its use cases expand, businesses will inevitably use it in consumer-facing situations in which trust will become the major issue. (For more, see our report Building consumer trust in AI.)

The rapid rise of ChatGPT (to take the best-known example) and of image generators has coincided with growing concern about misuse and user manipulation. Chatbots have produced a string of embarrassing outputs, while scammers have used AI-generated audio deepfakes to flood social media with fraudulent investment opportunities and other rip-offs. Air Canada, to name just one business, introduced AI-based customer support but took it down after the chatbot gave a customer false information about refund policies.

Trust is crucial during the introduction of any new technology; for generative AI, which has the potential to assist our family physicians, our financial planners and our airline pilots, this is especially true. As enterprises move cautiously to extend large language models (LLMs) into the consumer space, they face myriad challenges.

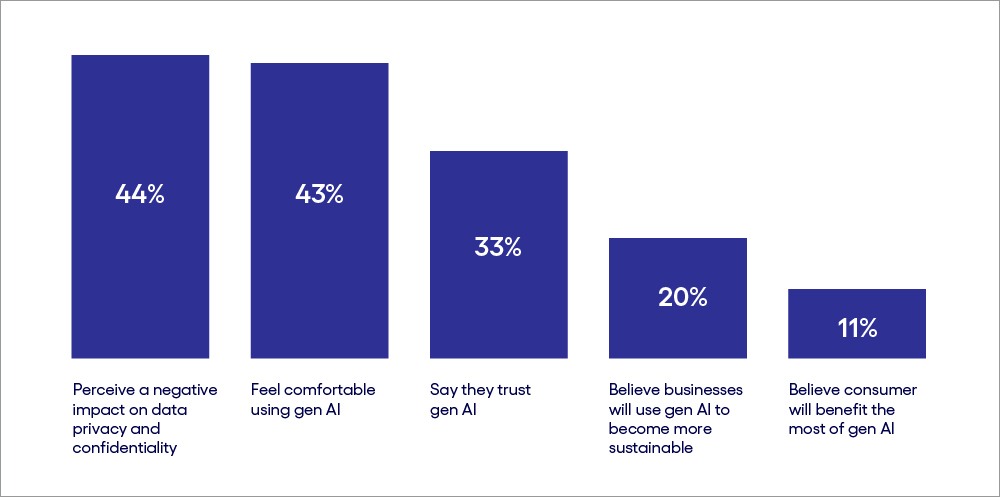

To explore consumers’ trust and skepticism in generative AI, we conducted a survey of 1,000 US adults. The results were eye-opening, showing that businesses have much work to do in this area (see Figure 1). In this report, we’ll focus on steps enterprises should take as they introduce consumer-facing products built on generative AI.

Figure 1 (Base: 1,000 US consumers; Source: Cognizant Research Trust Survey)

People are loath to cede control to generative AI

Consumers have strong positive feelings about generative AI in certain settings. For example, 70% believe generative AI will make other technologies easier to use, a belief businesses should surely leverage as they roll out LLMs.

However, only 41% are comfortable using generative AI tools at work. In the personal space, it’s even less popular. Just 31% are comfortable using generative AI when they’re alone, and 13% when they’re with their families. What’s driving this AI skepticism? Are people embarrassed to make use of generative AI? Does it feel like cheating? At work, do they fear they’ll be disciplined for allowing software to ease their workload? These are sensitivities enterprises must learn more about—and overcome.

Our survey also found that an overwhelming majority of consumers prioritize human control over critical daily activities, including education, work and decision making. For instance, just 19% are comfortable with generative AI-led education for their children. Additionally, just 25% believe generative AI will make it easier for humans to think critically, and 66% believe generative AI will devalue creativity by generating too much content.

In other words, consumers don’t want to see themselves as simply handing over control of their lives. This means that as organizations present generative AI to the public, they must position it as an aide or assistant rather than an entity that does all the work. Microsoft’s Copilot is a good example of such positioning: as the name suggests, the software is not flying the plane but serving as a trusty backup. Similarly, on-device AI is gaining traction among smartphone manufacturers because it offers users greater privacy, security and control.

Businesses should take charge of communication

For businesses, one of the most positive takeaways from our survey is the clear link between familiarity with generative AI and enthusiasm. Of those who are very familiar with the technology, 70% say they're enthusiastic about it. Among those who know it by name only, that figure drops to 32%. We also found that large technology companies are the second most common source of information on generative AI (following only social media companies), and that people who learn about generative AI from tech companies are more likely than others to view it positively. Taken together, these findings show that companies seeking to increase consumer trust in the technology must take the bull by the horns when it comes to communication, continuously and tirelessly explaining its ability to improve lives.

So, what can businesses do right now to ensure that their future offerings are in line with consumer expectations? We offer five tips on moving forward.

Tackling AI skepticism, distrust and uncertainty

There’s no set path for businesses to overcome consumer distrust and skepticism over generative AI. Instead, they need to take the arduous route of carefully curating their AI offerings as they go along, much like building a plane while flying it. Here are some considerations that will help business leaders focus their efforts.

- Build the machinery of trust.

Building consumer trust should be the North Star for businesses. Generative AI must have explainability built in from the start—that is, both the model and its output must be interpretable to consumers. When people can easily see the sources of the output, skepticism gives way to trust. That trust grows over time, and thus consumers eventually feel less compelled to check sources.

- Don’t move fast or break things for your customers.

Companies taking a wait-and-watch approach with generative AI risk falling behind. But the “move fast and break things” approach embraced by tech companies over the past decade isn’t well suited either. Launching customer offerings without thoroughly integrating generative AI with existing systems could result in poor user experiences. It’s wiser to conduct appropriate R&D and testing to ensure customer offerings align with the organization’s AI readiness and deliver a robust experience.

- Think simple, be tactful.

Generative AI may change everything eventually—but there’s no harm in starting small and tracking customer response to changes. Incremental improvements to existing offerings can unlock hidden value with minimal risk. For instance, a generative AI-powered tool that uses customer data to create personalized offerings could provide early success, while a customer-facing autonomous chatbot (like Air Canada’s aforementioned debacle) may be an embarrassing setback. Tactfully deploying human oversight will be key to unlocking early value from generative AI.

- Embrace regulation.

Policymakers, politicians, academics and AI makers have all called for legal guardrails for generative AI. Today’s regulatory environment isn’t easy to navigate, but the European Union’s new AI Act is taking hold and may serve as a model for other nations. A human- and governance-centric approach to AI, driven by external expertise and organizational objectives, will help organizations avoid pitfalls. Importantly, our research shows that oversight will go a long way in bridging the trust gap. While specific responses varied, most in our survey are in favor of some sort of controls for those offering generative AI: a code of conduct, regulation, transparent sourcing, etc.

- Lead the way on communication.

As noted earlier, our research found that the more people know about generative AI, the more enthusiastic they are. We also found that many of the least enthusiastic get their information about generative AI from legacy news sources. It’s incumbent on businesses to ensure their message about the technology’s benefits reaches consumers across all media. Meanwhile, they must practice what they preach about ethics and governance, slowly earning consumer trust. By talking the talk and walking the walk, companies can gradually turn the dial from a fear-centric view to a human-centric one.

Today, there is a window of opportunity for businesses to establish a foundation of trust with their customers as their AI adoption matures. We believe building this foundation should be a priority for businesses, as it not only addresses AI skepticism among customers but also gives executives the right tools with which to unlock generative AI value.

For more information, visit our report on Building consumer trust in AI.

Akhil is a Manager in Cognizant's thought leadership team. Through insightful articles, blogs and infographics he traces technologies and forces that are constantly reshaping industries, their impact and how businesses can adapt.
