Turning potential to profit: building consumer trust in AI

<p><br> <span class="small">June 18, 2024</span></p>


<p><b>Our research reveals widespread mistrust regarding generative AI’s impact on economic, technological and societal interests. By shoring up trust in AI, businesses can discover new opportunities to win consumers’ hearts and minds.</b></p>

<p>Our economic system relies on one simple thing: trust. It underpins our entire monetary system. It’s the reason we pick one product over another. For businesses, trust is hard to gain and easy to lose.</p> <p>For a technology as new as generative artificial intelligence, trust plays an outsized role in public acceptance. Although the buzz around OpenAI’s ChatGPT may suggest that the battle for trust has been won, the reality for businesses launching generative AI-enhanced products is less optimistic.</p>

<p>In fact, consumers are generally skeptical about the technology. In our recent survey, only one-third of consumers trust generative AI. The rest have serious questions about it.</p> <p>To understand consumer perceptions of generative AI, we surveyed 1,000 consumers in the US and identified three areas where mistrust is prevalent:</p>

<ol> <li>Economic security and well-being</li> <li>The technology’s own inner workings</li> <li>Societal impacts</li> </ol> <p>The prevailing belief seems to be that while generative AI will increase corporate profits, its benefits won’t extend to employees or to society. Many find the technology mysterious, so they lack confidence in its decisions.</p> <p>However, our survey also shows that the more people understand generative AI and its safeguards, the more excited they become. Moreover, when you explain potential benefits, enthusiasm grows.</p> <p>Most businesses are unwilling to risk losing consumer trust. While senior leaders must move quickly from pilot to production, in this case, they cannot afford to break things. Instead, businesses that understand major customer concerns can deploy generative AI to build and maintain trust. To help them do so, we’ve identified the three main areas of consumer mistrust and made recommendations on how to assuage them.</p>

<h5><span style="font-weight: normal;"><span class="text-bold-italic">1.</span> Consumer fears about their economic well-being diminish their trust in generative AI</span></h5>

<p>The job market is a major area where consumers lack trust in generative AI. And they’re not wrong to be concerned: our prior research on the economic impact of generative AI, “<a href="https://www.cognizant.com/us/en/insights/insights-blog/generative-ai-productivity-wf2343151" target="_blank" rel="noopener noreferrer">New work, new world</a>,” finds that employment will be affected in many ways.</p> <p>In that report, we found that within the next decade, a staggering 90% of jobs could be disrupted to some degree by generative AI.</p>

<p>While change will be felt everywhere, the white-collar workforce is most vulnerable. Some roles could see up to 85% of their tasks disrupted. Even those less affected will see the technology encroach on their work.</p> <p>Data scientists, for example, could see 70% of their work change over the next decade. Financial analysts can expect more than half their tasks to shift over time.</p>

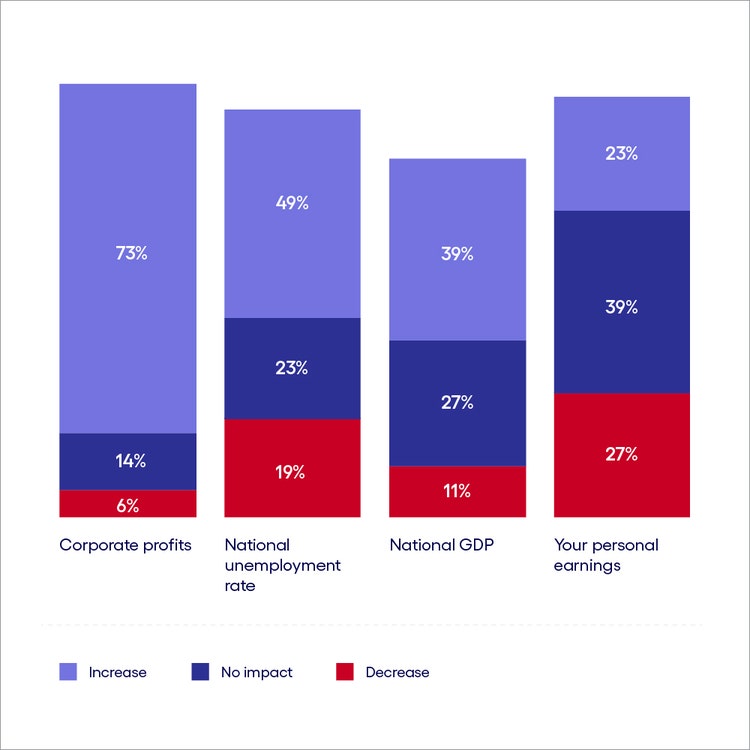

<p><br> Consumers are undecided about whether this change will be positive or negative. In our trust survey, 55% say generative AI will have no impact or a negative impact on employment opportunities and 49% believe national unemployment will increase (see Figure 1).</p> <p>Businesses, meanwhile, will fare far better, consumers contend. Seventy-three percent of survey respondents believe the economic gains of generative AI will boost corporate profits; however, only 23% think they’ll see a share of the benefits, and 27% expect their personal earnings to decrease (see Figure 1).</p>

<p><b>Consumers equate generative AI with personal economic loss</b></p> <p>Q: What impact, if any, do you think the emergence of generative AI will have on the following areas?</p>


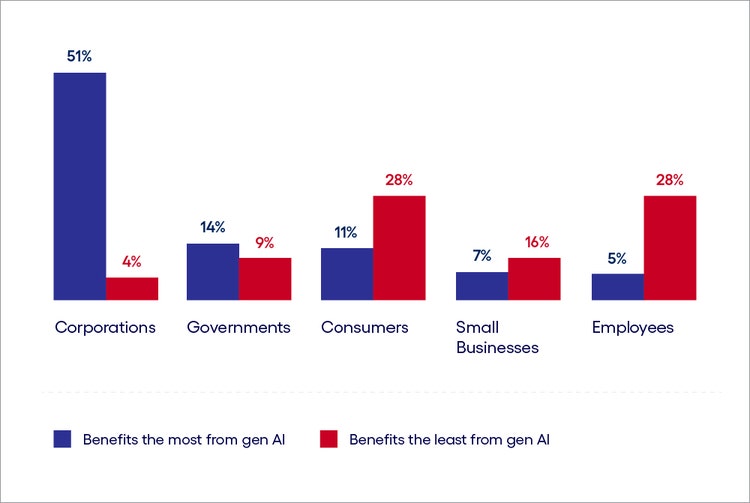

<p><span class="small">Base: 1,000 US consumers<br> Source: Cognizant Research Trust Survey<br> Figure 1 </span></p> <p>In the minds of consumers, the fruits of generative AI’s progress will benefit a select few at the expense of many. Indeed, 51% think corporations will take the lion’s share of the benefits, compared with just 11% for consumers and only 5% for employees (see Figure 2).</p> <p><b>Businesses gain while consumers lose</b></p> <p>Q: Who will benefit the most from generative AI? Who will benefit the least?</p>


<p><span class="small">Base: 1,000 US consumers<br> Source: Cognizant Research<br> Figure 2</span></p> <p>A big reason for this outlook is that people have figured out the generative AI/worker productivity equation. Our analysis in “<a href="https://www.cognizant.com/us/en/gen-ai-economic-model-oxford-economics" target="_blank" rel="noopener noreferrer">New work, new world</a>” finds labor productivity could increase as much as 10% annually by 2032. <a href="https://mitsloan.mit.edu/ideas-made-to-matter/how-generative-ai-can-boost-highly-skilled-workers-productivity" target="_blank" rel="noopener noreferrer">MIT reckons</a> workers who use generative AI correctly will improve their performance by as much as 40%.</p> <p>With companies lauding the productivity benefits of generative AI, employees will expect a share of those gains and will rapidly become disengaged, or switch employers, when they don’t receive them.</p>

<p>Consumers, too, will expect a fair share of the proceeds—whether in the form of cheaper products or improved services. There’s little tolerance for companies boosting profits without making commensurate improvements. Take recent increases in energy prices as an example—when consumers saw those same companies subsequently posting record profits, it fueled a variety of activities: aggressive energy saving plans, switching to competitors, lobbying governments to implement new tax regimes or <a href="https://www.euronews.com/green/2022/09/14/dont-pay-why-are-brits-boycotting-energy-payments-and-what-are-the-consequences" target="_blank" rel="noopener noreferrer">just outright refusals to pay</a>.</p>

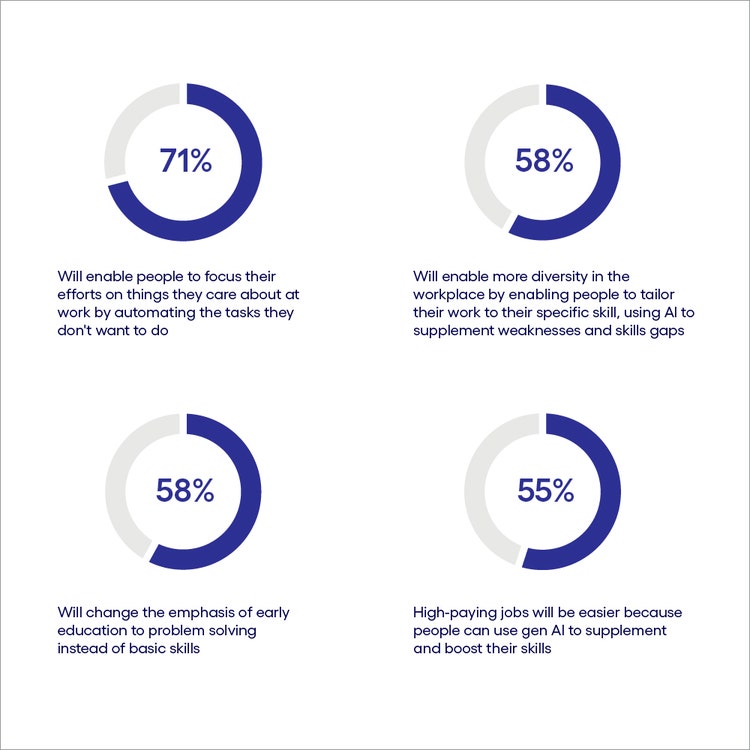

<p><br> While brand boycotts are at the more extreme end of the spectrum, today’s consumers will happily walk away when they feel their trust has been broken.</p> <h4>What to do about it: Widen the lens where gains will be realized to build trust in AI</h4> <p>Businesses need to reassure consumers and employees by communicating how expected productivity gains will benefit the wider community. Whether that’s increased wages, shorter working periods, or developing more attractive commercial propositions to consumers, the goal is to break the perception that only businesses win.</p> <p>Leaders must also be clear about how they plan to use generative AI—particularly where it will impact employees and how the company will manage that impact. This includes upskilling plans, clear guidelines for appropriate use and education on how the technology will affect certain business activities.</p> <p>Organizations need to go beyond articulating only the commercial benefits of generative AI. They must integrate the benefits of generative AI throughout their organizations. Fair practices, reskilling initiatives, employee-centric policies, and even commitment to ethical causes can all help undo the notion that only businesses stand to gain from the technology.</p> <p>Education and communication are key. In our survey, consumers were far more likely to embrace how generative AI would benefit themselves and society when it was explained to them. For instance, a majority of respondents responded positively to the impact of generative AI on work, workplace diversity, education and salaries when given an explanation of what generative AI could do in each area (see Figure 3).</p> <p><b>Connecting the dots</b></p> <p>Consumers recognize gen AI benefits when they’re clearly explained.</p>


<p><span class="small">Base: 1,000 US consumers<br> Source: Cognizant Research Trust Survey<br> Figure 3</span></p>

<h5><span class="text-bold-italic">2.</span> Consumer concerns about how the technology works diminish their trust in generative AI</h5>

<p>Coming to grips with generative AI’s technical complexities is another significant source of unease among consumers. “I’ve seen how positive AI can be, but I’ve also seen how scary and dangerous it can be,” one younger consumer said. “I don’t understand it, and that makes it hard for me to trust it,” chimed in one older respondent.<br /> </p> <p>Overall, 44% of survey respondents fear generative AI will compromise data privacy and confidentiality. As more data is collected about every minute of every person’s day, the proliferation of data used to power AI algorithms threatens to blur the lines of personal privacy. This fear is not unfounded, especially since large language models (LLMs) lack a delete button—there’s no simple way for them to unlearn certain information. In a world where some privacy regulations support an individual’s right to be forgotten, LLMs pose significant privacy issues.</p>

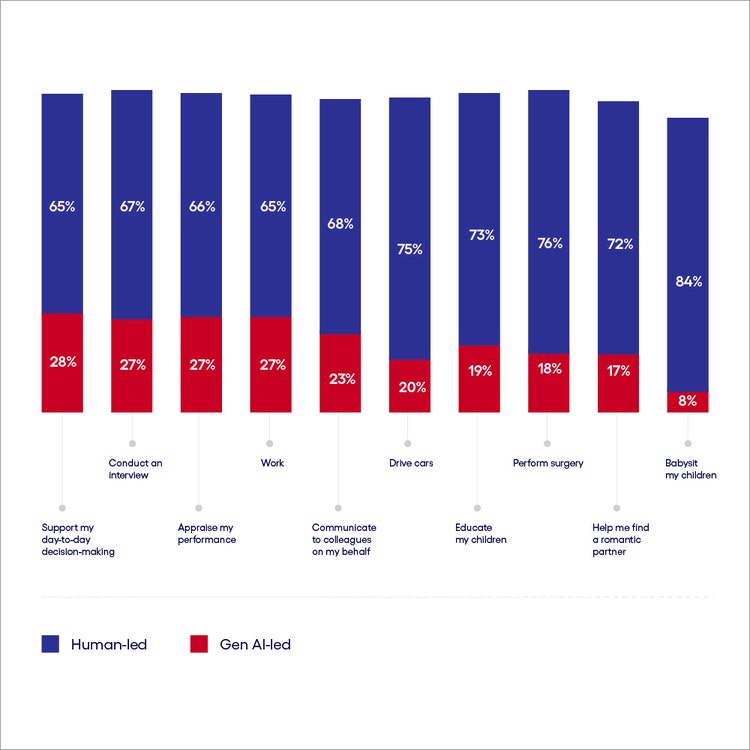

<p>It’s no surprise then, that consumers are cautious around the use of generative AI in a wide range of contexts—whether that unease stems from data privacy concerns or the reliability of outputs. When asked whether they would prefer an activity to be led by a human or by AI, the majority give AI the cold shoulder. For example, 84% prefer babysitting to remain a human-led activity, 75% want humans to be in charge of driving, and 76% want their surgeon to be a human being (see Figure 4).</p>

<p><b>People prefer people</b></p> <p>Q: Would you prefer the following activities to be human-led or gen AI-led?</p>


<p><span class="small">Base: 1,000 US consumers<br> Source: Cognizant Research Trust Survey<br> Figure 4</span></p>

<p>What the technology does with the data it gathers, and the output it generates from that data, are also under scrutiny. Since these models are trained on vast datasets created by humans, they run the risk of absorbing biases present in that data, perpetuating stereotypes or discrimination.</p> <p>One <a href="https://www.bloomberg.com/graphics/2023-generative-ai-bias/" target="_blank">study</a> found that in images created by Stable Diffusion, a text-to-image AI app, people with lighter skin tones dominated the picture sets created for high-paying jobs, while subjects with darker skin tones were more frequently produced by prompts like “fast-food worker” and “social worker.” And <a href="https://www.wsj.com/tech/ai/google-restricts-ai-images-amid-outcry-over-chatbots-treatment-of-race-34954fb6" target="_blank">Google</a> faced a PR disaster when its chatbot depicted people of color in Nazi uniforms, showing that overcoming stereotypes without resorting to misinformation is no easy feat.</p>

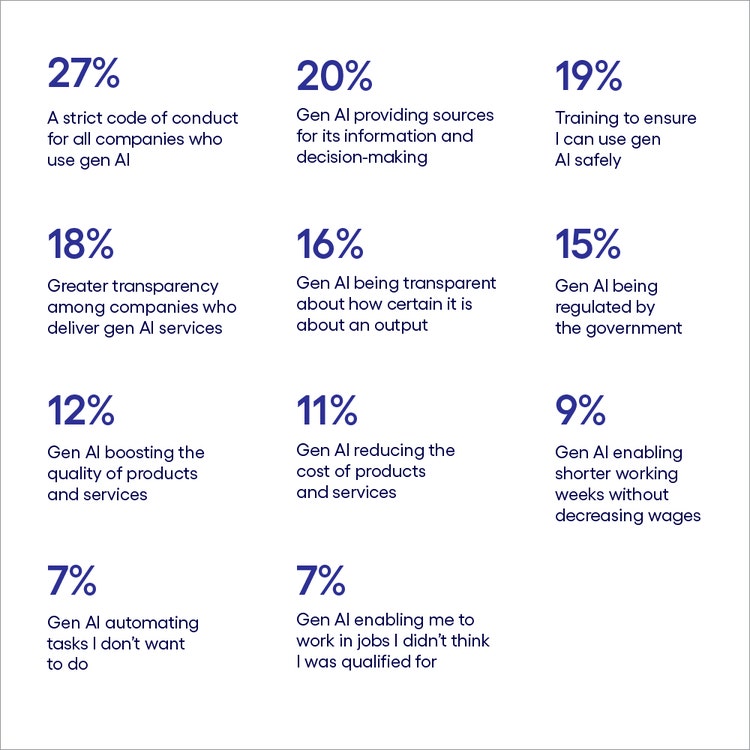

<p><br> Such cases fuel further distrust among consumers; in our study, 42% say generative AI makes them less likely to trust the authenticity of videos they find online, and 40% say it makes them doubtful of whether someone they meet online is human or AI.</p> <h4>What to do about it: Demystify how generative AI works to build trust in AI</h4> <p>Companies must be clearer about their generative AI programs’ inputs and outputs. They must show in concrete terms what data goes into their LLMs’ training and how generative AI decisions are made. Further, they should clearly label AI-generated products and images or allow customers to opt in or out of AI-powered features and services.</p> <p>These measures will help businesses avoid controversies like the one <a href="https://futurism.com/the-byte/netflix-what-jennifer-did-true-crime-ai-photos" target="_blank" rel="noopener noreferrer">Netflix experienced</a> recently. The media giant was accused of using AI-generated images in a true-crime documentary, with fans arguing that the images introduced falsity into the historical record.</p> <p>To further bolster trust, businesses must implement robust mechanisms for verifying the accuracy of AI-generated outputs. This means clearly labeling the sources of data used to create an output and providing context about its origins and potential limitations.</p> <p><b>Strict codes of conduct and providing sources would make consumers trust gen AI more</b></p> <p>Q: Which of the following would make you trust gen AI more? (Select 2)</p>


<p><span class="small">Base: 1,000 US consumers<br> Source: Cognizant Research Trust Survey<br> Figure 5</span></p> <p>It’s also crucial to incorporate uncertainty modeling—probabilistic predictions of an LLM’s confidence in its accuracy. By enabling their AI to express these confidence levels, businesses can highlight areas where human oversight or additional data verification may be necessary. People will trust generative AI more if businesses acknowledge its potential for fallibility and make clear that guardrails have been built in to correct errors.</p> <p>Generative AI can itself be a potent tool for quality control and transparency. Companies can train additional AI models to cross-check outputs, identifying potential inconsistencies, biases or factual errors. This multilayered approach not only increases the reliability of results but also makes the verification process more transparent. By openly demonstrating their commitment to self-auditing and using the power of AI for accountability, businesses can build a trust-based relationship with consumers.</p>
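<p>As one illustration of the uncertainty modeling described above, the sketch below scores a generated answer and flags low-confidence answers for human review. It is a minimal sketch under stated assumptions, not a description of any particular product: it assumes the model exposes per-token log probabilities, it uses the geometric mean of token probabilities as a rough confidence proxy, and the names <code>sequence_confidence</code>, <code>route</code> and the 0.8 threshold are hypothetical. Token probabilities are not the same as calibrated factual accuracy, so a real deployment would need to validate any such score against human judgments.</p>

```python
import math
from dataclasses import dataclass


@dataclass
class RoutedAnswer:
    answer: str
    confidence: float          # rough proxy in [0, 1], not a calibrated accuracy
    needs_human_review: bool   # True when confidence falls below the threshold


def sequence_confidence(token_logprobs):
    """Geometric mean of token probabilities: exp(mean of log probabilities).

    Returns 0.0 for an empty sequence so that missing evidence is treated
    as low confidence rather than high confidence.
    """
    if not token_logprobs:
        return 0.0
    return math.exp(sum(token_logprobs) / len(token_logprobs))


def route(answer, token_logprobs, threshold=0.8):
    """Attach a confidence score and flag the answer for review if it is low.

    The threshold is an illustrative assumption and would need tuning
    against real review outcomes.
    """
    confidence = sequence_confidence(token_logprobs)
    return RoutedAnswer(answer, confidence, confidence < threshold)


if __name__ == "__main__":
    # Hypothetical log probabilities for two generated answers.
    confident = route("Paris is the capital of France.", [math.log(0.95)] * 6)
    uncertain = route("The merger closed in 2019.", [math.log(0.5)] * 6)
    for result in (confident, uncertain):
        label = "send to human reviewer" if result.needs_human_review else "show to customer"
        print(f"{result.confidence:.2f} -> {label}")
```

<p>Surfacing the score alongside the answer, rather than hiding it, is one way to make the guardrail visible to consumers as well as to internal reviewers.</p>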

<h5><span class="text-bold-italic">3.</span> Consumer misgivings about societal repercussions diminish their trust in generative AI</h5>

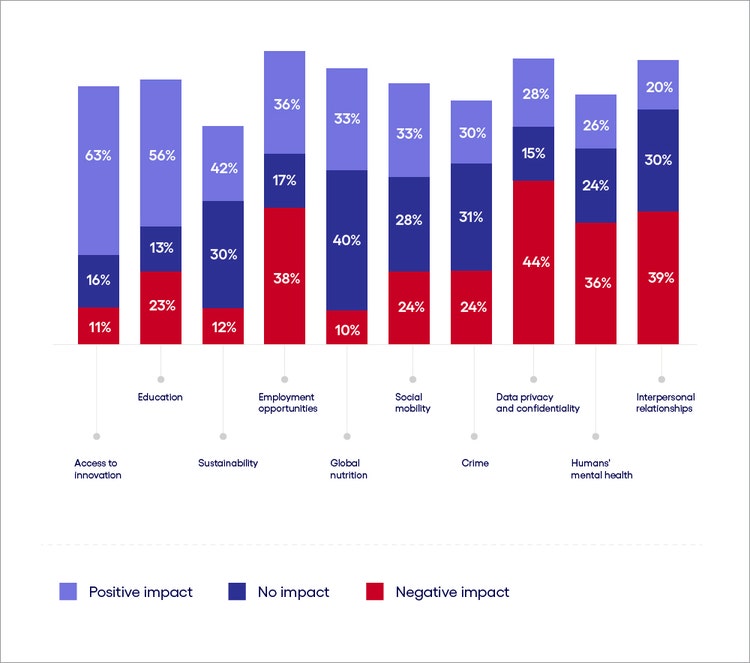

<p>Our study reveals deep distrust regarding AI’s potential societal impacts, albeit with some bright spots (see Figure 6). In addition to concerns about employment opportunities and data privacy, as noted above, the other big issues consumers point to are mental health and interpersonal relationships. At the same time, respondents also see opportunities for generative AI to positively impact education, access to innovation and sustainability.<br /> </p> <p>Even outside the AI realm, brand impact on consumer well-being is coming under the microscope. Some brands, such as <a href="https://www.thedrum.com/open-mic/lush-abandons-social-media-is-it-the-right-decision" target="_blank" rel="noopener noreferrer">British cosmetics retailer Lush, have boycotted social media</a> because of the perceived impact it has on the mental health of their consumers. At the other end of the spectrum, some companies have been mired in controversy when their advertisements popped up alongside problematic content. Last year, at least <a href="https://edition.cnn.com/2023/08/16/tech/x-ads-pro-nazi-account-brand-safety/index.html" target="_blank" rel="noopener noreferrer">two brands announced they were suspending advertising on X</a>, formerly known as Twitter, after their ads ran on an account promoting fascism.</p> <p><b>A wide range of social concerns</b></p> <p>Q: What type of impact do you believe gen AI will have on the following?</p>


<p><span class="small">Base: 1,000 US consumers<br> Source: Cognizant Research Trust Survey<br> Figure 6</span></p> <h4>What to do about it: Leverage the energy of enthusiastic consumers to build trust in AI</h4> <p>The challenge ahead is two-fold: building greater consumer consensus and experimenting sensitively with the technology. In the case of the latter, businesses must build frameworks into their adoption of the technology that examine both the short- and long-term implications of their actions. While there are rarely strict moral frameworks available, and certainly not one with sweeping consensus, the reality for a technology as nascent as this is that businesses will learn, and so will the world around them. The ability to analyze and, where necessary, reverse actions that they deem too damaging to well-being is a vital part of experimentation.</p>

<p>This is the same approach businesses would use to ensure commercial success: experimenting wisely to limit or reverse any damage and ensure innovations are commercially viable.</p> <p>To foster greater consumer awareness, according to our research, leaders would be wise to focus on young adults, who exhibit deeper familiarity with and greater trust in emerging technologies.</p>

<p><br> Our study highlights a significant generational divide: 54% of younger adults (ages 25–39) demonstrate heightened comfort and confidence in the capabilities of generative AI, while only 23% of those aged 65+ can say the same.</p> <p>By tapping into the knowledge, perspectives and digital fluency of the younger demographic, leaders can infuse fresh energy and insights into the progression of generative AI within society. Empowering the younger generation to actively participate in shaping the trajectory of generative AI will foster a sense of ownership and investment in its development.</p> <h4>Trust in business is trust in AI</h4> <p>Consumers have spoken, and businesses need to listen. So, while businesses must move fast, it’s clear that with something as fragile as consumer trust, we’ve left the era where it’s OK to break things.</p> <p>The trustworthiness of generative AI is still being debated in the public square—and those judgments will extend to how businesses choose to use it.</p> <p>The imperative is crystal clear: prioritize trust-building efforts in the key areas that hit consumers where it counts most—their economic viability, their confidence in a new and mysterious technology and the health of the society they live in.</p> <p>This is the way forward—and an entirely new opportunity to recreate the brand as one that is trusted not just because of how it does business but also because of its innovative, transparent and responsible use of generative AI.</p>

<p>Ramona Balaratnam is a Manager in Cognizant Research. With extensive experience in the Consulting industry, she delves into strategic research to uncover innovative market insights and analyze their impact across industries and businesses.</p>